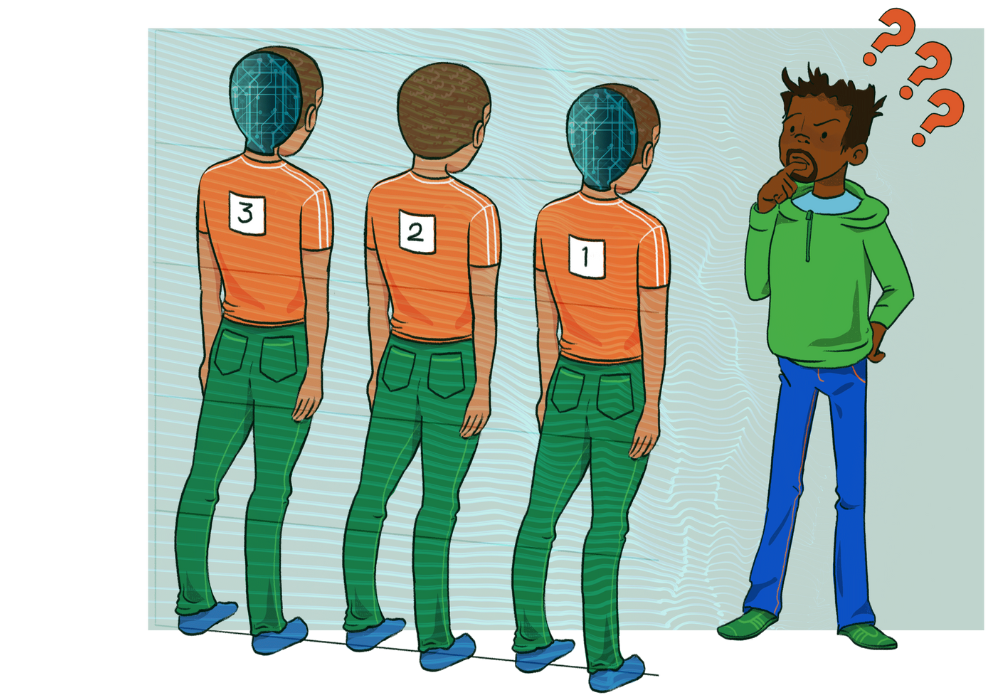

Synthetic media, often referred to as ‘deepfake’ content, may be something you are seeing more of online – even if you don’t realise it. As synthetic media becomes harder to detect with the rise of artificial intelligence technology, it can sometimes be challenging to work out what’s real and what’s not.

Synthetic media can be used in many different ways, which makes it a complex new form of content for online safety and digital literacy. Our Synthetic Media Hub is designed to help you and your communities understand synthetic media and the support available to guide parents, young people, and your communities through the online safety and digital literacy skills needed to identify, address and respond to synthetic content.

What is Synthetic Media?

Synthetic media is a relatively new concept, referring to forms of media generated with the help of artificial intelligence (AI). Often highly realistic, synthetic media can include various types of content, such as images, videos, audio, and text.

The technology used to generate synthetic media is often trained on existing, real content found online. This means synthetic media can appear realistic and may be difficult to distinguish from genuine media. Techniques used to create synthetic media include deep learning algorithms, such as Generative Adversarial Networks (GANs), which are particularly effective at producing realistic content.

Unlike non-synthetic media, which includes anything created directly by humans (such as a photoshopped image where a person manually edits a photo), synthetic media is generated or altered by computer systems using AI. Synthetic media can be used in many different ways, from entertainment and creative arts to more practical applications like virtual assistants and automated journalism.

However, synthetic media also poses risks. It can be used maliciously to create manipulated images, videos or audio that make individuals appear to say or do things they never did. This can have significant implications for privacy, security, and the potential spread of misinformation.

Synthetic media represents a powerful technological advancement, offering both creative possibilities and challenges. Understanding its capabilities and risks as this form of technology continues to evolve is important to ensure we stay safe online.

Synthetic Harmful Content

Synthetic harmful content refers to any type of media created or altered using AI with the intent to deceive, misinform, harm, or exploit individuals or groups.

Examples of synthetic harmful content include instances where AI-generated content is used to portray individuals as supporting a scam, to create fake news stories, to defame individuals, and to spread misinformation. For instance, synthetic videos or audio can make it appear as though a person is endorsing fraudulent schemes, damaging their reputation and potentially leading others to fall victim to the scam.

Synthetic Sexual Content

Synthetic sexual content refers to sexual or nude media created using AI, and is often referred to in the media as ‘deepfake’ content.

Examples of synthetic sexual imagery include images created by swapping someone’s face onto another person’s body, and images produced by ‘nudification’ apps, which can alter a clothed image to make it appear nude. In this context, a person’s image can be edited or altered to become an intimate image, or an intimate image can be manufactured to resemble someone else. The perpetrator may then share this content, or in some cases sell it, to cause harm or for financial gain.

Terminology

Synthetic media refers to content created or modified using artificial intelligence (AI). As an emerging form of media, it has also been referred to as “AI-generated media,” “media produced by generative AI,” and sometimes “personalised media” or “personalised content.” In mainstream media, synthetic content (and synthetic sexual content) is often referred to as a “deepfake” when it involves the realistic manipulation of video or audio to alter a person's likeness or voice.

With this terminology in mind, you can read more about our decision to use the term “synthetic media” here.

The Impact of Synthetic Media

As with all of the topics we explore, synthetic media has its positives and negatives.

In a positive sense, synthetic media is changing how we entertain ourselves online. It can be used in the videos we watch and the games we play, and it gives us opportunities to use our imagination at the click of a button. There are plenty of ways synthetic media makes our experiences online more fun and exciting, whether by creating avatars or translating our voices into different languages.

However, synthetic media can also create challenges and can be used to generate harmful content. It can make it hard to tell what’s real and what’s not. As the impact of synthetic media grows, it’s important to learn how to understand and respond to it, helping us to stay safe online.

You may have seen in recent news, on the radio, or in your daily discussions that synthetic media is becoming a big deal. As with all new forms of media and the technology used to create it, there are plenty of stories about how it is being used, and the impact it is having on people, both in good and bad ways.

Benefits of Using Synthetic Media Technology

Synthetic media can offer several benefits, including the ability to create highly realistic and versatile digital content efficiently, enabling new possibilities in entertainment, education, and communication.

Helps creativity and problem-solving

Synthetic media can support creativity and problem-solving, for example by quickly generating imaginative content or helping people explore new ideas and approaches.

Increases efficiency

Synthetic media makes it easier and faster to create lifelike and versatile digital content, helping to improve how we make things like movies, games, and educational tools.

Supports accessibility and inclusivity

By making it easier to create adaptable content, synthetic media can improve accessibility and inclusivity, for example by helping to translate information and ideas into different languages.

Negatives of Using Synthetic Media Technology

The potential negatives of synthetic media include the risk of misinformation, harmful deepfakes, and a loss of trust in authentic content, because it can be difficult to distinguish between real and artificially generated media.

May generate deceptive or harmful content

Synthetic media can generate deceptive or harmful content by creating realistic but false representations of people, events, or information, which can mislead, harm or manipulate audiences.

Can be used to violate people's privacy

Synthetic media can be used to violate people's privacy by creating and distributing convincing yet fake depictions of private moments or personal information without consent.

Affects people's trust in what they see online

Synthetic media can affect people's trust in what they see online by making it harder to tell what's real and what's fake, which can lead people to doubt the truth of anything they encounter on the internet.

Support and Advice for Managing Synthetic Media

There is plenty of support and advice available for schools, organisations and parents who need more information about synthetic media, deepfakes, and the help available. The following advice pages provide tailored support depending on your circumstances and needs:

Hub Resources

If you want to find out more about artificial intelligence and online safety, we have a series of resources you can access across our topic hubs and services.

Training

The Synthetic Media Hub covers support and considerations for young people, professionals, parents and carers. This media and the technology used to create it are evolving rapidly, and it’s important to keep up to date with the online world. SWGfL training helps audiences of all kinds receive the latest guidance, support, and resources.

As part of the UK Safer Internet Centre, we run a range of additional training sessions to support professionals across the UK. Our Online Safety Clinics provide a further opportunity for professionals to hear from our experts. You can find a variety of these sessions on the UK Safer Internet Centre website.

Articles

Synthetic media is constantly changing, so keep up to date with the latest trends and guidance.