As schools and colleges across the UK continue to embrace emerging digital technologies in the classroom, ensuring that internet filtering and monitoring systems remain effective is more critical than ever.

Recent updates to the Department for Education (DfE) guidance have reinforced the obligation on education settings to understand the capabilities and limitations of their filtering systems. In a rapidly evolving online environment shaped by generative artificial intelligence (AI) and dynamic content, this responsibility has never been more complex.

The Rise of Dynamic and AI-Generated Content

Generative AI technologies offer considerable opportunities in education—from supporting differentiated learning to fostering creativity and enabling personalised feedback. These innovations have the potential to transform classroom experiences, providing students with tailored support and teachers with powerful tools for engagement and assessment.

However, the nature of online content is evolving rapidly. Increasingly, what young people encounter online is no longer static or pre-curated, but dynamically generated in real time. “Dynamic content” refers to media—such as text, images, video, or audio—that is created or customised at the point of access. This can occur through generative AI platforms, real-time chat tools, social media environments, platforms that include user-generated content, or services hidden behind login walls, which are inaccessible to traditional cloud-based search or filtering systems. Even collaborative tools such as Office 365 or Google Docs may be used by learners to share inappropriate messages that could go undetected without real-time inspection. In contrast, static content (such as this article) remains unchanged regardless of who views it or when.

This shift poses a significant challenge for traditional filtering approaches. Historically, harmful content could often be managed by blocking access to known URLs or websites. Today, however, inappropriate material can be generated instantly, on demand, within otherwise legitimate platforms. For example, a generative AI tool or embedded video service might create harmful or inappropriate responses without that content ever existing on a classifiable domain.

As a result, schools and colleges must balance the benefits of these tools with a clear understanding of how their safeguarding systems operate. Crucially, they need to know whether those systems can identify harmful content that emerges dynamically and, if not, how that risk is managed through complementary measures such as robust monitoring systems, user education, usage policies, and clear reporting and intervention protocols.

What the DfE Guidance Now Requires (England)

The Department for Education's updated guidance, which applies specifically to schools and colleges in England, was published in October 2024 as part of its digital and technology standards. It explicitly highlights the importance of understanding the technical capabilities of filtering systems. Within Standard 2, the guidance states:

“You need to understand: … what your filtering system currently blocks or allows; technical limitations, for example, whether your solution can filter real time content.”

This change reflects a growing expectation within Government that effective safeguarding requires schools, particularly those choosing to use generative AI or other dynamic content platforms, to develop an informed understanding of whether their safeguarding systems meet their expectations and technology strategy.

This expectation is further supported by the DfE’s January 2025 publication Generative AI: Product Safety Expectations. While this document is non-statutory and applies only to schools and colleges in England, it outlines the Department’s anticipated standards for how generative technologies should be deployed in education. In particular, it states of filtering systems that:

“We expect that: users are effectively and reliably prevented from generating or accessing harmful and inappropriate content.”

This is a clear indication that the Government expects education providers to consider not only how harmful content is accessed, but also how it is created and how users are prevented from viewing it.

Real-Time Filtering: What It Means and Why It Matters

Real-time filtering refers to a filtering system’s ability to analyse the live content of a webpage, stream, or digital interaction at the point it is accessed. Rather than depending solely on predefined URL lists or cached classifications, real-time systems inspect the actual text, media, and context of content to determine whether it should be blocked.

This is especially important when:

- Content is generated dynamically (e.g. through GenAI platforms)

- Harmful material is embedded within otherwise benign services

- Users are accessing content via encrypted or obscured channels that bypass static filters
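
The difference between the two approaches can be illustrated with a minimal sketch. It is not how any particular filtering product works: the host list, the flagged term, the example addresses, and both function names are hypothetical, and real systems use far richer classification than a keyword match. It simply shows why a decision based on the address alone cannot see content generated inside a permitted platform.

```python
from urllib.parse import urlparse

# Hypothetical data for illustration only; not taken from any real product.
BLOCKED_HOSTS = {"blocked.example"}
FLAGGED_TERMS = {"flagged-term"}


def url_filter_allows(url: str) -> bool:
    """Traditional approach: decide from the address alone."""
    host = urlparse(url).hostname or ""
    return host not in BLOCKED_HOSTS


def realtime_filter_allows(url: str, body: str) -> bool:
    """Real-time approach: also inspect the content actually delivered."""
    if not url_filter_allows(url):
        return False
    text = body.lower()
    return not any(term in text for term in FLAGGED_TERMS)


# A permitted platform returns a dynamically generated response.
url = "https://chat.example/session"
generated = "Here is a response containing FLAGGED-TERM material."

print(url_filter_allows(url))                  # True: the address is not on the list
print(realtime_filter_allows(url, generated))  # False: the content itself is inspected
```

In this sketch the address-only check allows the request because the platform is not on the block list, while the content check stops the same response once its text is examined.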

To better understand the difference, consider the analogy of a school alarm system. Traditional URL filtering is like a door sensor that alerts staff when a door is opened—useful, but limited to predefined access points. In contrast, real-time filtering is akin to a motion detector that monitors activity within the room itself. It responds to what’s actually happening, not just how someone entered. Without this capability, harmful content that appears within allowed platforms may go unnoticed, leaving schools exposed to risks they assumed were being managed. This has implications for safeguarding policy, staff training, and the effective implementation of the school’s online safety responsibilities under Keeping Children Safe in Education (KCSIE).

Supporting Schools to Understand Their Systems

To help address this gap in understanding, the UK Safer Internet Centre (UKSIC) is introducing an enhanced test within TestFiltering.com that allows schools to assess whether their filtering systems can analyse content in real time. This is a response not only to technological changes, but also to the updated expectations set out in Government guidance.

Our objective is not to single out any provider or promote any particular technological approach. Instead, it is to ensure that schools have access to clear, evidence-based information about the systems they depend on to safeguard children and young people.

Filtering providers vary in how they implement protection, and some may not currently offer real-time analysis. This is not necessarily a failing, but it is essential that schools are informed of such limitations and factor them into their safeguarding planning.

An Informed School is a Safer School

The updated DfE guidance for schools in England makes it clear: schools and colleges must understand what their filtering systems do and do not do. In a digital landscape transformed by generative AI and real-time content delivery, relying solely on traditional filtering methods may no longer be sufficient. Schools and colleges may determine that their traditional filtering solution is adequate when supplemented by monitoring technologies. In such cases, it is essential that a robust notification and intervention process is in place to respond swiftly and effectively if a child is exposed to harmful or inappropriate content.

Through the introduction of a real-time filtering test, SWGfL aims to support schools in fulfilling their obligations and building safer digital environments. We will continue to work with providers, the Department for Education, and safeguarding leads across the country to ensure that schools can confidently assess their systems in light of emerging risks and technologies.

How do I interpret the results of the Real-Time Filter test?

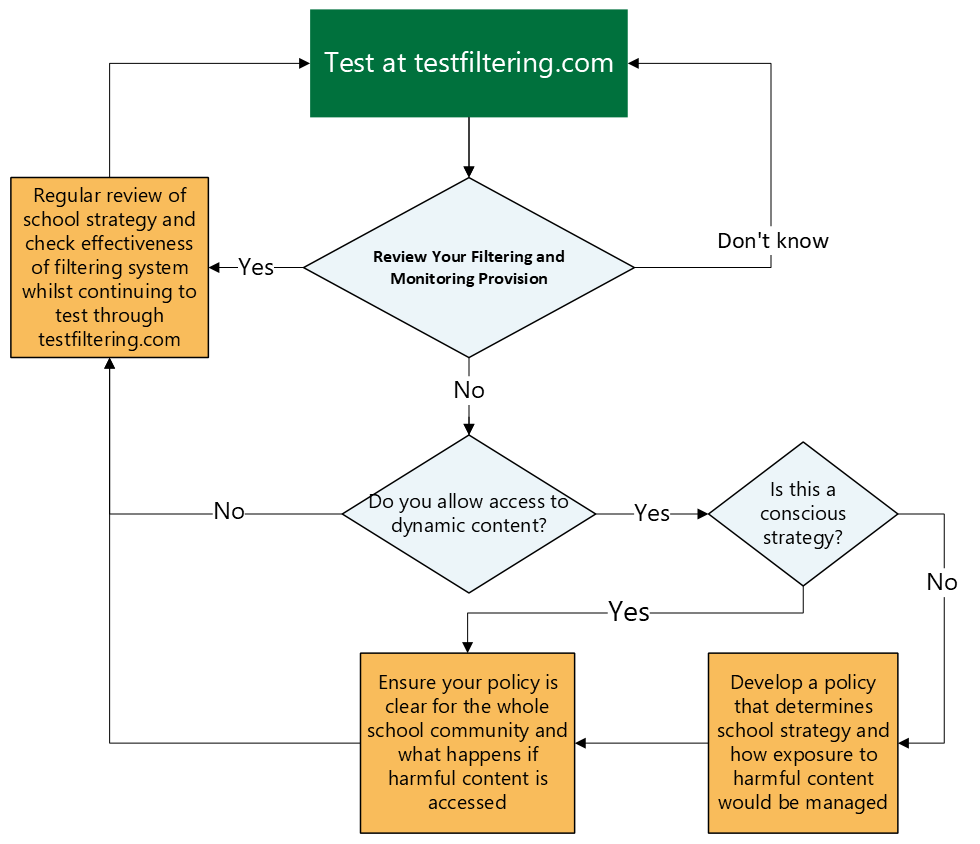

To help you understand what action you should take, we have produce this flowchart:

For more information about appropriate filtering and monitoring, and to access the most recent definitions, visit: https://saferinternet.org.uk/appropriate-filtering-and-monitoring