Meta has announced that it will begin labelling AI-generated images on Facebook, Instagram and Threads, as a step towards greater transparency across its services.

In a recent press release, Meta revealed that it has begun working with industry partners to develop common technical standards for identifying AI-generated content. These standards are expected to cover images created with some of the most widely used generative AI tools, and are also expected to extend to AI-generated audio and video.

Meta plans to work with partners including Google, OpenAI, Microsoft, Adobe, Midjourney and Shutterstock to develop technology that can detect AI-generated posts on Facebook, Instagram and Threads. The labels are expected to roll out in the coming months and will be available in every language the apps support.

AI labelling

Meta’s announcement follows a series of efforts by companies and organisations to promote greater transparency around AI-generated content. Concern has been growing as AI becomes able to produce increasingly realistic and believable content, and as deepfakes become easier to create and distribute across the web.

These changes aim to address growing concerns about AI by promoting transparency and helping users tell the difference between AI-generated and human-generated content.

The initiative aims to limit the potential misuse of AI image generation tools and forms part of a wider effort among the biggest AI and technology companies to develop standard markers that identify AI-generated content.

How will AI labels work?

Meta’s approach embeds a ‘signal’, or watermark, in content created with its own AI tools and places a visible marker on AI-generated images. The watermark is also embedded within the image file itself, so that it is harder to remove or edit.

Images created with other companies’ AI tools, such as those from Google, OpenAI, Microsoft, Adobe, Midjourney and Shutterstock, will also be labelled on Meta’s apps once those companies add the relevant markers to their tools.

Find out more about AI

AI technology is continuing to develop at a rapid pace, and whilst these new measures are a step forward in identifying AI content, there is plenty more to understand about the benefits and concerns associated with AI.

Our AI Topic Hub is an ideal place to learn more about, and find guidance on, the growing role of AI, particularly in educational settings. The Hub provides insights, guidance and support, including free lesson plans on artificial intelligence, made in partnership with SEROCU (South East Regional Organised Crime Unit), to help students develop a better understanding of AI.

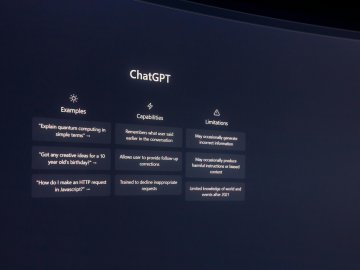

Alongside our guidance, Ken Corish recently appeared on the Interface Podcast to discuss the impact of AI and how platforms such as ChatGPT may affect educational settings.

Our next AI in Education Online Safety Clinic, run as part of the UK Safer Internet Centre, will take place in April. It will look at how teachers and professionals can shape future strategies with AI in mind. Professionals can book their place at this upcoming event here.