Meta has announced new features to support young people and families across Facebook and Instagram, encouraging people to be more conscious about how they manage their time on social media. The latest features will include additional parental supervision tools, messaging privacy features, and ‘Take a Break’ notifications.

Parental Supervision Tools

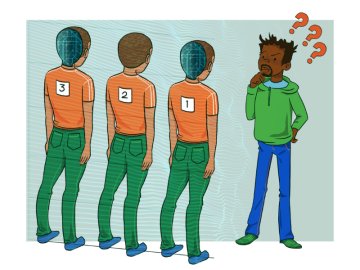

The updated parental supervision tools will enable parents and guardians to have more insight into how their child is using Messenger, with information on how much time they are spending on the app, their current privacy and safety settings, and updates on their contacts list.

Importantly, these updates will not allow carers to read the messages young people send to each other. Instead, they are designed to help young people navigate online spaces with a degree of independence while keeping safety in mind. Young people will also be able to notify their parental supervisors when they report someone on Messenger. In addition, carers will be able to see if a young person changes their privacy settings and gain insight into who can see their Messenger stories.

Similar parental supervision features will also be included on Instagram, encouraging young people to reach out to their guardians after blocking someone. The new features are part of a rolling update from Meta to expand the Parental Supervision settings in its Family Centre, which aims to give parents and guardians unified access to resources and tools that help support their child's online experience across Meta apps.

Messaging Privacy Features

Meta has also announced that it will be taking new measures across Instagram to protect people from unwanted interactions. The changes are currently being tested and are intended to ensure that people must give permission before they can receive messages from people they don't know.

Alongside this, message request invites will be limited to text, ensuring that no one can receive photos, videos, calls, or voice messages from someone they don't follow.

These new features will complement Instagram's existing Safety Notices, which appear when Instagram detects potentially concerning messages being sent by adults to young people. Existing measures also restrict adults from sending private messages to young people who do not follow them.

Time Management and Wellbeing Notifications

New features on Facebook will notify young people once they have used the app for 20 minutes, similar to Instagram's existing 'Take a Break' feature. The notifications will encourage young people to set daily time limits and take time away from Facebook.

Instagram will also introduce notifications encouraging young people to close the app if they are watching Reels late at night. These build on Instagram's 'Quiet Mode' feature, which is being rolled out globally. Quiet Mode lets people turn off notifications and set up auto-replies showing that they are in quiet mode and don't want to be disturbed, helping them set boundaries with their followers online.

Further Support

These updates are a significant step towards protecting young people's wellbeing and privacy online. However, they should be seen as one part of a wider approach to digital wellbeing, and further solutions are still needed to help protect young people from harmful online content and experiences.

To support young people’s experiences on social media, we have created a series of checklists in collaboration with Facebook, Instagram, and other social media platforms that can be used to guide social media users through their profile and privacy settings.

Report Harmful Content is also available to anyone over the age of 13 to help them report harmful material online. You can find out more about types of online harm and how to report them on the Report Harmful Content website. The national reporting centre also provides further support to anyone who has already submitted a report to a social media platform and would like the outcome reviewed.