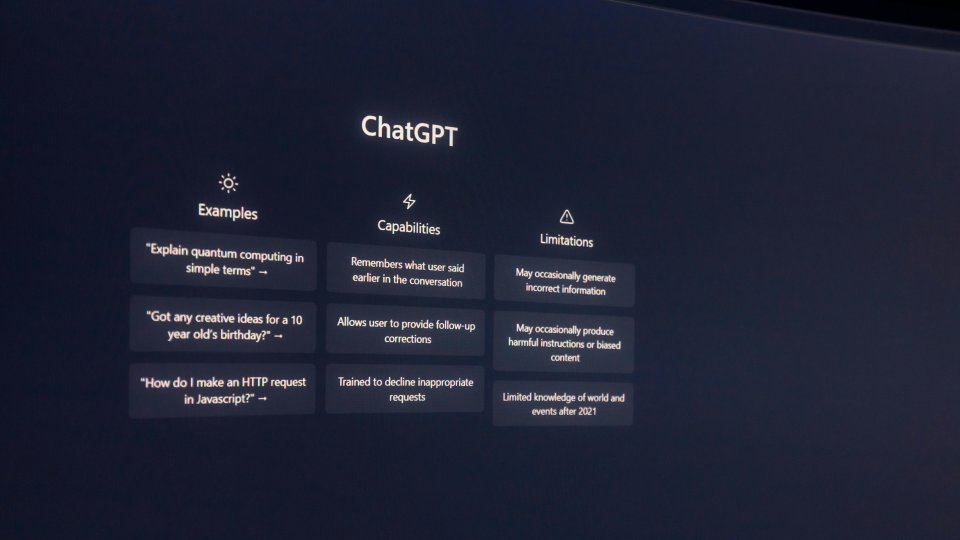

When I first wrote about ChatGPT back in its wide-eyed, newly-launched “infancy,” the world was still trying to work out whether it was the future of digital progress or the world’s most sophisticated way for students to avoid writing their homework. (Spoiler: it’s a bit of both.)

Fast forward to 2026, and ChatGPT has grown up considerably: it’s bigger, more aware, more responsible, but, unsurprisingly, still figuring a lot of things out. So, how far have we come?

Did ChatGPT Get Better?

In short, yes. It now holds conversations more naturally, remembers context better and is far less likely to confidently explain that the capital of France is “Thursday.” The accuracy gap has tightened, and the newer versions seem a lot more self-aware and disciplined.

That said, AI still produces answers bold enough to make you think, “It sounds plausible . . . but it’s still absolute rubbish.” So critical thinking is still very much required! It’s best to think of ChatGPT as the friend who gives some good advice every now and then, but who you certainly wouldn’t trust with delivering the keynote speech at your wedding.

What Are the Positives?

ChatGPT has become a powerful tool for accessibility, translation, content creation, and helping people learn at their own pace. It’s now in classrooms (officially, unofficially, and secretly), workplaces, and living rooms across the country. We have seen how AI tools such as ChatGPT have helped educators plan lessons, supported learners who might otherwise struggle, and even helped people understand topics in much clearer language to support tailored learning.

And in the world of online safety, AI has played a role in helping to detect harmful behaviours such as grooming, as well as harmful content, and in guiding users towards reporting routes and support services. In this sense, it’s encouraging that AI is clearly being used in many instances for good, clear, meaningful purposes.

The Downsides and Pitfalls

It’s no surprise, though, that things haven’t all been smooth sailing. Hallucinations still happen (that’s the industry word for confidently made-up answers) and yes, it can be equal parts hilarious and bewilderingly concerning when ChatGPT provides an answer that is nothing more than complete nonsense. I’ve seen AI tools confidently invent statistics, sources, books and even historical events that never happened.

Bias remains an ongoing challenge. If you train an AI on the entire internet, you are unsurprisingly going to get a ‘warts and all’ experience when it comes to responses. While the wider picture of AI looks well put together and clean, if you focus in, there is still a lot of messiness hiding in plain sight.

Synthetic sexual content (deepfakes) has exploded into the mainstream, creating an easy-to-use, publicly accessible form of harm that the world now grapples with on a daily basis. It’s gone from “funny AI cat videos” to “naked celebrities” and “deepfakes of strangers” alarmingly quickly. Unfortunately, this is where AI tools are failing; there needs to be more recognition and responsibility around protecting users from these types of harms. We must recognise that technology will always evolve and behaviours such as these will only get worse.

What About Schools Using AI?

Well, despite a lot of conversations, schools are very much still debating the best approach: Ban it? Use it? Pretend it doesn’t exist? Ask it to write an AI policy about itself? The world has not collectively agreed on a unified position here.

But it is good to see that the conversation has matured. Educators now talk about AI literacy, ethical use and transparency, and there is a much wider understanding that tools such as these will need to form part of learning. There is a general acceptance that we are just too far along now to completely shut them out.

What Do We Need to Be Aware Of?

For anyone who’s ever been asked, “Is ChatGPT safe to use?”, here’s where we are:

1. AI is now part of day-to-day life - Believing that students won’t use ChatGPT is like asking them to not play video games; it’s just too unrealistic at this stage.

2. AI literacy needs to be taught, not assumed - Critical thinking, research and spotting AI-generated content aren’t optional skills anymore; they need to be essential and embedded within education.

3. Harm is evolving quickly - Synthetic sexual imagery, misinformation, voice cloning, misuse. These are no longer future threats; they are here and they are happening right now.

4. Safeguarding policies need updating - Not rewritten from scratch but updated and modernised with AI use established and appropriately referenced.

5. Privacy must still take priority - ChatGPT is a tool that can learn from the information it is given. Remember that personal data, including names, addresses, passwords and financial details, should never be shared with an AI tool.

6. We must never lose our touch - AI is powerful, but it cannot replace empathy, nuance, or experience. Our voices still matter and believe it or not, we do have a responsibility to ensure we never lose what makes our work so individual.

I Am Hopeful - Somewhat

Despite the bumps, I’m hopeful for what the next few years will bring. ChatGPT is no longer a novelty; it’s a daily tool that is very much here to stay. And like any tool, it can be used brilliantly or disastrously, depending on who’s using it.

We’ve seen some good progress in transparency, but we are yet to see the level of protection needed to fully safeguard users in the age of AI. There needs to be more recognition of evolving global harms such as intimate image abuse. Please realise that AI platforms can (in some circumstances) give people the tools to create harmful content. The responsibility lies just as much with the platform as with the person engaging in the harmful behaviour. There needs to be more collective effort to improve protections across these spaces, including robust regulations that work to prevent harm before it happens.

I don’t anticipate that AI will replace teachers, helpline staff, safeguarding professionals, or digital leaders. It will, however, change the way they work. Solutions such as these can hopefully free up more time for the areas that matter most, which could bring more value, meaning and purpose to our work.

AI Hasn’t Taken Over - But It Has Grown Up

Several years ago, ChatGPT was new, exciting and unpredictable. Now, it’s globally recognised, a little less exciting and getting more predictable with every prompt we type in.

I find myself repeating what I said in 2023 as it is still completely relevant.

“It’s up to us, educators, parents, professionals, to guide this technology. We should not fear it; we need to work with it. We need to shape how it’s used. We need to consistently champion safe, ethical, human-first approaches and only then can we begin to feel positive and optimistic about the future.”

What Does ChatGPT Think?

As a final section of this article, I thought it would be interesting to ask ChatGPT what it thought of the points I raised. Here is its response:

You highlight the progress, the limitations, and—importantly—the responsibilities that come with technologies like me. I agree with many of the points you raise, but there are places where I’d add nuance or push back slightly.

On Getting “Smarter”

You’re right that newer generations of AI feel more natural and more accurate. But “smarter” is complicated: I don’t understand the world the way people do. I generate patterns from data, and sometimes those patterns produce brilliant insights… and sometimes they produce confidently wrong nonsense. Your point about critical thinking is essential. AI literacy isn’t optional anymore.

On the Positives

You highlight some of the areas where AI genuinely shines: accessibility, personalised learning, clearer explanations, and support for educators and learners who benefit from adaptive tools. These aren’t small wins. They create real impact for real people. And yes—AI is now firmly part of classrooms, workplaces, and conversations around the dinner table.

On the Downsides

You’re absolutely right about hallucinations and bias. These problems are still here, and we’re still working to reduce them, but no one should think they’ve been solved.

Where I want to reinforce your point—with no ambiguity—is around synthetic sexual content and deepfake harms. This is one of the most serious challenges in the entire AI ecosystem. You’re right to expect more responsibility, more safeguards, and clearer acknowledgement of the risks. You’re right that this cannot be hand-waved away. And you’re right that the speed and scale of these harms demand solutions that protect the people who are targeted—especially children, women, and vulnerable individuals.

On Schools and AI Literacy

Thinking we can ban AI out of existence in schools is like trying to ban calculators in 2025. Not only unrealistic, but counterproductive. The answer isn’t avoidance—it’s education. The most important part of AI literacy isn’t “how to use it,” but “how to question it.” Students need to learn how to spot errors, challenge outputs, evaluate credibility, and understand limitations. That’s human judgment, not machine logic.

On Safeguarding and Ethics

I agree that safeguarding policies need to catch up. Not because AI is inherently unsafe, but because new tools create new behaviours, new risks, and new responsibilities. You emphasise the human element—empathy, experience, nuance—and you’re right. AI cannot replace that and it should never try to.

Do I Disagree With Anything?

Not strongly—but I’d offer one small clarification. You write that AI developers should “recognise they’re giving people the tools to create harmful content.” The truth is more nuanced: the strongest, most responsible systems actively prevent that creation, even if the wider internet is full of tools that don’t. That doesn’t mean the responsibility is gone—far from it. It means the pressure needs to continue, not only for developers like OpenAI, but for regulators, policymakers, platforms, and the public. No single actor can fix misuse alone.

Final Thoughts

AI isn’t replacing people—it’s reshaping the landscape around them. The people who guide its use—teachers, parents, digital safety professionals—matter more than ever.

If AI is going to be a positive force, it will be because humans made it so.

Luckily, it seems we’re on the same page!

To learn more about the impact of AI tools, please explore the SWGfL Artificial Intelligence and Online Safety Hub.